[Blog Operator] Logging the Blog

![[Blog Operator] Logging the Blog](/content/images/size/w1200/2025/01/20250131_193018.png)

Issue

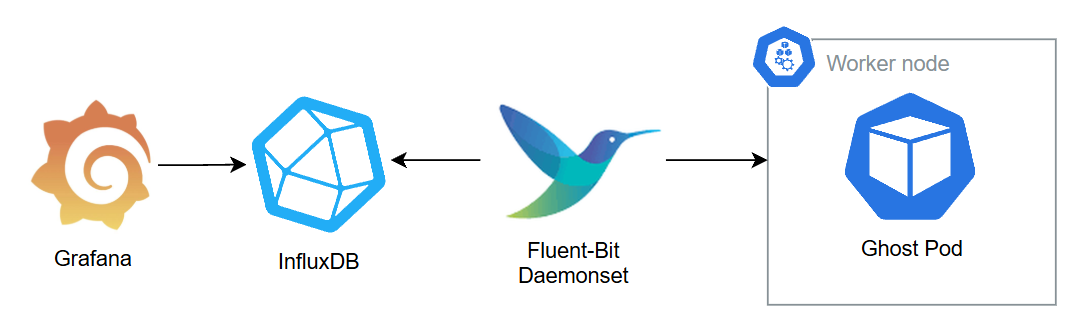

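Around 4 a.m. on January 31, the blog was suddenly hit by an unexpected load spike. Reading the application logs through Lens or commands like kubectl logs made them hard to manage, so I decided to build a Grafana + Fluent Bit setup, as described below, to make log lookups easy.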

Setup

First, install InfluxDB, the time-series database that will store the logs.

$ wget -q https://repos.influxdata.com/influxdata-archive_compat.key

$ echo '393e8779c89ac8d958f81f942f9ad7fb82a25e133faddaf92e15b16e6ac9ce4c influxdata-archive_compat.key' | sha256sum -c && cat influxdata-archive_compat.key | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/influxdata-archive_compat.gpg > /dev/null

$ echo 'deb [signed-by=/etc/apt/trusted.gpg.d/influxdata-archive_compat.gpg] https://repos.influxdata.com/debian stable main' | sudo tee /etc/apt/sources.list.d/influxdata.list

$ sudo apt-get update && sudo apt-get install influxdb

$ sudo systemctl enable --now influxdb

Then connect to InfluxDB as shown below and create a database.

$ influx

> create database nginx_log_db

Next, since the blog currently runs on Kubernetes, install Fluent Bit as follows.

GitHub - fluent/fluent-bit-kubernetes-logging: Fluent Bit Kubernetes Daemonset


$ wget https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-service-account.yaml

$ wget https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role.yaml

$ wget https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role-binding.yaml

After downloading the three files above, write the DaemonSet and ConfigMap as follows.

fluent-bit-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: logging
  labels:
    k8s-app: fluent-bit
data:
  # Configuration files: server, input, filters and output
  # ======================================================
  fluent-bit.conf: |
    [SERVICE]
        Flush         5
        Log_Level     info
        Daemon        off
        Parsers_File  parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_Port     2020

    @INCLUDE input-kubernetes.conf
    @INCLUDE filter-kubernetes.conf
    @INCLUDE output-influxdb.conf

  input-kubernetes.conf: |
    [INPUT]
        Name              tail
        Tag               kube.nginx.*
        Path              /var/log/containers/*ingress-nginx-controller*.log
        Parser            nginx
        DB                /var/log/flb_kube.db
        Mem_Buf_Limit     5MB
        Skip_Long_Lines   On
        Refresh_Interval  10

  # Kube_Tag_Prefix must match the expanded tail tag up to the file name:
  # "kube.nginx." (from Tag) + "var.log.containers." (from the log path).
  filter-kubernetes.conf: |
    [FILTER]
        Name                kubernetes
        Match               kube.nginx.*
        Kube_URL            https://kubernetes.default.svc:443
        Kube_CA_File        /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        Kube_Token_File     /var/run/secrets/kubernetes.io/serviceaccount/token
        Kube_Tag_Prefix     kube.nginx.var.log.containers.
        Merge_Log           On
        Merge_Log_Key       log_processed
        K8S-Logging.Parser  On
        K8S-Logging.Exclude Off

  output-influxdb.conf: |
    [OUTPUT]
        Name      influxdb
        Match     kube.nginx.*
        Host      influxdb.dispiny.life
        Port      8086
        Database  nginx_log_db

  parsers.conf: |
    [PARSER]
        Name        apache
        Format      regex
        Regex       ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key    time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name        apache2
        Format      regex
        Regex       ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^ ]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key    time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name        apache_error
        Format      regex
        Regex       ^\[[^ ]* (?<time>[^\]]*)\] \[(?<level>[^\]]*)\](?: \[pid (?<pid>[^\]]*)\])?( \[client (?<client>[^\]]*)\])? (?<message>.*)$

    [PARSER]
        Name        nginx
        Format      regex
        Regex       ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key    time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name        json
        Format      json
        Time_Key    time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name        docker
        Format      json
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep   On

    [PARSER]
        Name        syslog
        Format      regex
        Regex       ^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$
        Time_Key    time
        Time_Format %b %d %H:%M:%S

    [PARSER]
        Name        ghost_log
        Format      regex
        Regex       ^\[(?<timestamp>[^\]]+)\] (?<level>[A-Z]+) "(?<method>[A-Z]+) (?<path>[^\s]+)" (?<status>\d+) (?<latency>\d+)ms$
        Time_Key    timestamp
        Time_Format %Y-%m-%d %H:%M:%S
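To check what the nginx parser above actually extracts, its regex can be exercised outside Fluent Bit. The Python sketch below copies the Regex line verbatim and rewrites Fluent Bit's Onigmo-style named groups, (?<name>...), into Python's (?P<name>...) form; the sample access-log line is made up for illustration. Two things are worth checking against your own nodes before trusting the parsed fields: the files under /var/log/containers are written by the container runtime, which wraps every line (Docker JSON, or the CRI "<time> <stream> <P|F> <message>" layout), and ingress-nginx's default log format appends extra fields (request length, request time, upstream info) after the user agent, which the trailing $ in this regex does not allow. When the parser does not match, the tail input keeps the raw line under the log key, which is the field queried in Grafana later in this post.

```python
import re
from datetime import datetime
from typing import Optional

# The nginx parser's Regex from parsers.conf, copied verbatim (Onigmo syntax).
NGINX_REGEX_ONIGMO = (
    r'^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] '
    r'"(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)'
    r'(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$'
)


def to_python_regex(pattern: str) -> str:
    """Rewrite named groups (?<name>...) as (?P<name>...); lookbehinds (?<= and (?<! are untouched."""
    return re.sub(r"\(\?<(?=[A-Za-z_])", "(?P<", pattern)


NGINX_RE = re.compile(to_python_regex(NGINX_REGEX_ONIGMO))


def parse_nginx_line(line: str) -> Optional[dict]:
    """Return the named fields, or None when the regex does not match the line."""
    match = NGINX_RE.match(line)
    return match.groupdict() if match else None


def parse_time(value: str) -> datetime:
    """Apply the parser's Time_Format to the extracted time field."""
    return datetime.strptime(value, "%d/%b/%Y:%H:%M:%S %z")


# Made-up access-log line in nginx's combined format.
sample = '192.168.0.10 - - [31/Jan/2025:04:00:00 +0900] "GET /ghost/ HTTP/1.1" 200 612 "-" "curl/8.5.0"'
fields = parse_nginx_line(sample)
```

With this sample, fields["path"] is "/ghost/" and fields["code"] is "200", while prefixing the same line with a CRI header such as "2025-01-31T04:00:00.000000000+09:00 stdout F " makes parse_nginx_line return None.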

fluent-bit-ds.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: fluent-bit

namespace: logging

labels:

k8s-app: fluent-bit-logging

version: v1

kubernetes.io/cluster-service: "true"

spec:

updateStrategy:

type: RollingUpdate

selector:

matchLabels:

k8s-app: fluent-bit-logging

template:

metadata:

labels:

k8s-app: fluent-bit-logging

version: v1

kubernetes.io/cluster-service: "true"

annotations:

prometheus.io/scrape: "true"

prometheus.io/port: "2020"

prometheus.io/path: /api/v1/metrics/prometheus

spec:

containers:

- name: fluent-bit

image: fluent/fluent-bit:1.5

imagePullPolicy: Always

ports:

- containerPort: 2020

readinessProbe:

httpGet:

path: /api/v1/metrics/prometheus

port: 2020

livenessProbe:

httpGet:

path: /

port: 2020

resources:

requests:

cpu: 5m

memory: 10Mi

limits:

cpu: 50m

memory: 60Mi

volumeMounts:

- name: varlog

mountPath: /var/log

- name: varlibdockercontainers

mountPath: /var/lib/docker/containers

readOnly: true

- name: fluent-bit-config

mountPath: /fluent-bit/etc/

terminationGracePeriodSeconds: 10

volumes:

- name: varlog

hostPath:

path: /var/log

- name: varlibdockercontainers

hostPath:

path: /var/lib/docker/containers

- name: fluent-bit-config

configMap:

name: fluent-bit-config

serviceAccountName: fluent-bit

tolerations:

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

- operator: "Exists"

effect: "NoExecute"

- operator: "Exists"

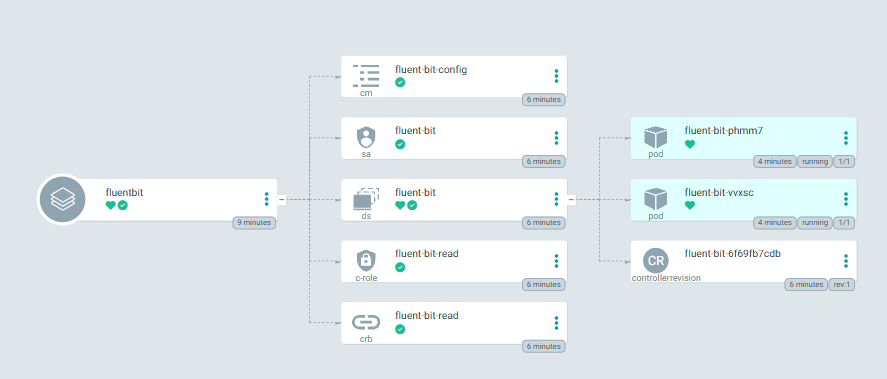

effect: "NoSchedule"그 후 argocd로 배포할 경우 아래와 같이 Application을 작성해주고, 수동으로 할 경우 kubectl로 설치해주면 된다.

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: fluentbit
  namespace: argocd
spec:
  destination:
    name: ''
    namespace: logging
    server: https://kubernetes.default.svc
  source:
    path: fluentbit/
    repoURL: https://github.com/dispiny/local-kubernetes-manifest.git
    targetRevision: HEAD
  sources: []
  project: default
  syncPolicy:
    automated:
      prune: false
      selfHeal: false
    syncOptions:
      - CreateNamespace=true
      - ServerSideApply=true
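Before querying InfluxDB, it can help to confirm that Fluent Bit itself is reading and shipping records. Because HTTP_Server is On, each Fluent Bit pod serves JSON counters at /api/v1/metrics on port 2020, the same built-in server the DaemonSet probes point at. The sketch below assumes that port has been forwarded locally (for example with kubectl -n logging port-forward pod/<fluent-bit-pod> 2020:2020) and that the response has the documented shape of per-instance "input" and "output" objects; instance names such as tail.0 or influxdb.0 depend on the configuration, so the code does not hard-code them.

```python
import json
from urllib.request import urlopen


def fetch_metrics(base_url: str = "http://127.0.0.1:2020") -> dict:
    """GET Fluent Bit's JSON metrics; assumes HTTP_Server On and a forwarded port."""
    with urlopen(f"{base_url}/api/v1/metrics", timeout=5) as resp:
        return json.load(resp)


def input_records(metrics: dict) -> int:
    """Total records read across all input instances (e.g. tail.0)."""
    return sum(c.get("records", 0) for c in metrics.get("input", {}).values())


def summarize_outputs(metrics: dict) -> dict:
    """Map each output instance (e.g. influxdb.0) to (proc_records, errors, retries_failed)."""
    return {
        name: (c.get("proc_records", 0), c.get("errors", 0), c.get("retries_failed", 0))
        for name, c in metrics.get("output", {}).items()
    }
```

Calling summarize_outputs(fetch_metrics()) returns one tuple per output instance; a proc_records count that keeps growing while errors and retries_failed stay at 0 suggests records are reaching InfluxDB.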

Once the deployment above has finished successfully, connect to InfluxDB and check that data is arriving, as follows.

> show measurements

> select * from "kube.nginx.var.log.containers.ingress-nginx-controller-xxx-xxx_ingress-nginx_controller-xxx.log"
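The long measurement name above is the record's tag. Fluent Bit's influxdb output writes each record into a measurement named after its tag, and the tail input expands the * in Tag kube.nginx.* into the monitored file's path, with the leading / dropped and the remaining / turned into dots. The Python sketch below reproduces that expansion and builds, without sending, the equivalent request to InfluxDB 1.x's HTTP /query endpoint as a scriptable alternative to the influx shell. The file name is a hypothetical, shortened example, and the host assumes InfluxDB 1.x on its default port 8086 with authentication disabled.

```python
from urllib.parse import urlencode
from urllib.request import Request


def expand_tail_tag(tag_pattern: str, file_path: str) -> str:
    """Mimic tail's tag expansion: '*' -> file path, leading '/' dropped, '/' -> '.'."""
    return tag_pattern.replace("*", file_path.lstrip("/").replace("/", "."), 1)


def influx_query_request(host: str, db: str, query: str, port: int = 8086) -> Request:
    """Build (but do not send) an InfluxDB 1.x /query GET request."""
    params = urlencode({"db": db, "q": query})
    return Request(f"http://{host}:{port}/query?{params}", method="GET")


# Hypothetical, shortened file name; real ones end with a 64-character container ID.
path = "/var/log/containers/ingress-nginx-controller-abc12-xyz34_ingress-nginx_controller-0123.log"
measurement = expand_tail_tag("kube.nginx.*", path)
req = influx_query_request("localhost", "nginx_log_db", f'SELECT * FROM "{measurement}" LIMIT 5')
```

Passing req to urllib.request.urlopen sends the query. InfluxDB 1.x accepts SELECT and SHOW over GET, while statements such as CREATE DATABASE must be sent with POST.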

Monitoring Setup

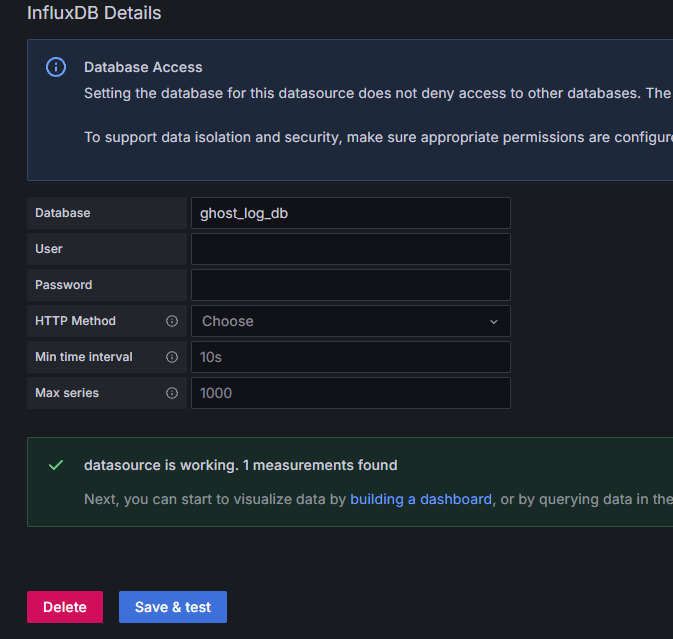

Add InfluxDB as a data source in Grafana, as shown below.

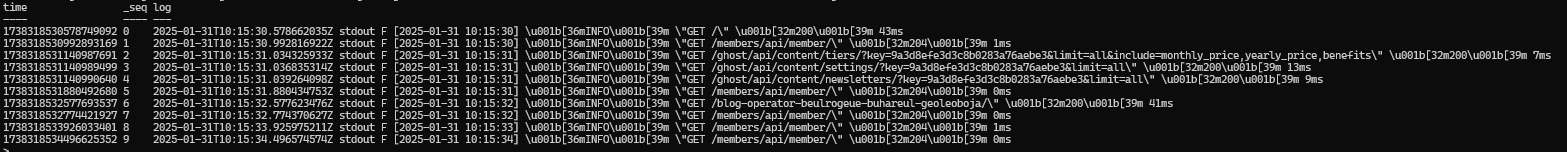

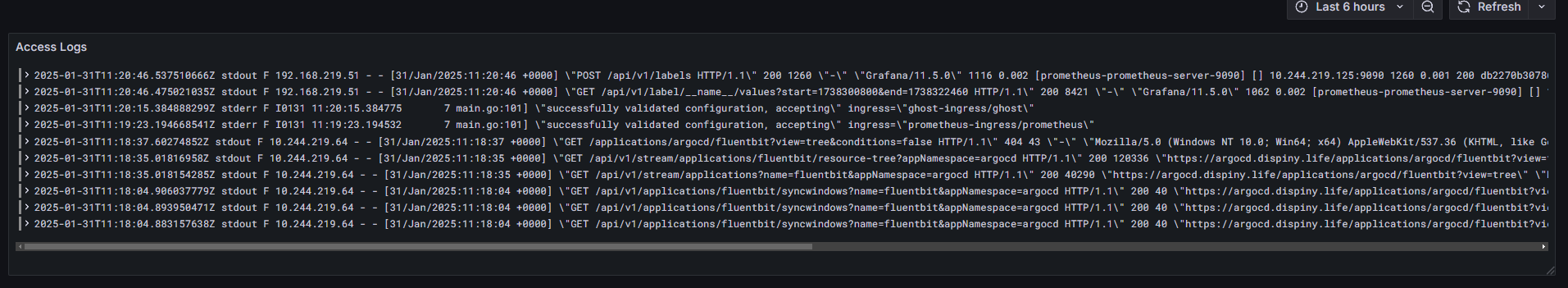
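The same data source can also be created through Grafana's HTTP API (POST /api/datasources) instead of the UI, which is handy if Grafana is ever rebuilt. This is a minimal sketch that only builds the request. It assumes a Grafana API or service-account token allowed to create data sources, InfluxQL as the query language, and that Grafana can reach InfluxDB at http://influxdb.dispiny.life:8086 (the host used in the Fluent Bit output). The Grafana URL, token, and data source name are placeholders, and jsonData.dbName reflects how recent Grafana versions store the InfluxQL database name, so check it against your Grafana version.

```python
import json
from urllib.request import Request


def influxdb_datasource_request(grafana_url: str, token: str) -> Request:
    """Build (but do not send) a request that creates an InfluxDB (InfluxQL) data source."""
    payload = {
        "name": "nginx-logs",  # hypothetical data source name
        "type": "influxdb",
        "access": "proxy",  # server access mode: Grafana's backend sends the queries
        "url": "http://influxdb.dispiny.life:8086",  # assumed reachable from Grafana
        "jsonData": {"dbName": "nginx_log_db", "httpMode": "GET"},
    }
    return Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder Grafana URL and token.
req = influxdb_datasource_request("http://grafana.example.local:3000/", "<token>")
```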

Create a Logs dashboard, then write the query below to create a dashboard widget.

SELECT "log" FROM "kube.nginx.var.log.containers.ingress-nginx-controller-xxxx-xxxx_ingress-nginx_controller-xxxxxx.log"

The nginx ingress controller logs now show up in the dashboard.
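The measurement in this query embeds the log file name, which contains the controller pod name and container ID, so it stops receiving new lines once the controller container restarts or its pod is replaced, and logs start arriving under a new measurement. InfluxQL accepts a regular expression in the FROM clause, so a panel query along the lines of SELECT "log" FROM /^kube\.nginx\.var\.log\.containers\.ingress-nginx-controller/ WHERE $timeFilter matches every controller log measurement, with $timeFilter being Grafana's macro for the dashboard's time range. The hypothetical Python helper below builds such a query from a measurement prefix, escaping regex metacharacters and the / delimiter.

```python
# Characters with special meaning in a regex, plus '/', the InfluxQL regex delimiter.
_SPECIAL = set(r".\/*+?()[]{}^$|")


def log_panel_query(measurement_prefix: str, field: str = "log") -> str:
    """Build an InfluxQL query reading `field` from every measurement whose name
    starts with measurement_prefix, restricted to Grafana's time range."""
    escaped = "".join("\\" + ch if ch in _SPECIAL else ch for ch in measurement_prefix)
    return f'SELECT "{field}" FROM /^{escaped}/ WHERE $timeFilter'


query = log_panel_query("kube.nginx.var.log.containers.ingress-nginx-controller")
```

Because the regex matches a prefix rather than one exact name, the panel keeps working across controller restarts; the trade-off is that old and new measurements appear as separate series in the result.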
